The importance of interpretability in machine learning models cannot be overstated, as it sits at the crossroads of transparency, trust, and ethical AI use. As machine learning becomes increasingly integrated into critical decision-making processes across industries, from healthcare to finance, understanding how these models arrive at their conclusions is paramount.

Machine learning (ML) has recently revolutionized our daily lives and industries. It has greatly affected banking, healthcare, and transportation decision-making and predictions. Between 2018 and 2024, the global machine-learning market is projected to grow at a 42.08% CAGR.

Machine learning helps computers improve at tasks by learning from data rather than following explicitly programmed instructions. As these models get more complicated, it gets harder to understand how they make decisions.

The need for interpretability in machine learning models has increased. These models need to make sound decisions and explain them in a way that people can understand and trust. Experts who want solutions that both work well and are easy to understand stress how important it is to keep models simple. If you want to learn more, you can look into an AI consulting company.

So, let’s begin to learn the importance of interpretability in machine learning.

Why Does Interpretability Matter?

Understanding how a machine learning model works is crucial for four reasons: trust, regulatory compliance, model improvement, and smart decision-making. No-code machine learning solutions have become increasingly popular due to the widening AI skills gap. This trend opens up machine learning to people without any prior experience with programming or data science.

People can make, teach, and use machine learning models on these platforms without writing any code. To further understand the importance of interpretable machine learning, let's dissect the reasons. Here are the benefits of interpretable ML models.

Trust and Transparency

The successful use of interpretable machine learning models relies a lot on trust.

Trust is super important in industries like public safety, healthcare, and finance. There are computer programs called ML models that help make big decisions in these industries. But even if these models are really accurate, people might not trust them if they don't know how they work.

So, interpretability is key. It's like a window into the ML model's brain, showing how it makes decisions. When people can see how the model works, they're more likely to trust it. Plus, it helps make sure the decisions it makes make sense. So, being open about how the machine learning frameworks and models work boosts confidence and ensures better decisions.

Compliance and Ethics

Many rules for businesses say that computer systems making decisions must be fair and explain why they decide certain things. For instance, in the European Union, the GDPR has rules about this. It lets people ask for an explanation if a computer's decision affects them.

Interpretability is important for machine learning models to follow these rules. It means the models can clearly explain why they make their decisions. This is also helpful for finding, understanding, and reducing any unfairness in the models. This helps ensure AI apps and practices are fair and equal for everyone.

Debugging and Improvement

All machine learning tools and models have limitations and make mistakes; none is flawless. Because interpretability illuminates the decision-making process, it helps engineers and developers troubleshoot and improve their models.

Making a model better involves understanding why it acts the way it does and where it messes up. It's important to keep improving models so they can always do their job well. This means making them more accurate, trustworthy, and fair.

Strategic Decision-Making

Interpretability means understanding the factors that affect a model's predictions. For business leaders, this info is crucial for making smart decisions. It ensures that the model's outcomes align with the company's goals and principles.

For instance, a company can adjust its strategy using the insights that interpretability provides. Understanding which factors drive customer behavior can help it sell more. Knowing how a machine learning model works makes it a stronger tool for innovation and growth. It also makes the model more trustworthy and easier for people to use.

Methods for Enhancing Interpretability in Machine Learning Models

It's super important to make machine learning (ML) models easier to understand. This helps people get what they're all about and ensures they're clear, open, and reliable. Understanding how these models work helps people trust them more. Many different folks, like researchers, data scientists, and business folks, care about this.

| Method | Example |
|---|---|
| Feature Importance | In a credit risk prediction model, feature importance analysis reveals that credit history and income are the most significant factors influencing predictions. |
| LIME (Local Interpretable Model-agnostic Explanations) | For a specific loan application, LIME shows that the model predicted a high risk due to recent late payments and low income despite an otherwise positive credit history. |
| SHAP (SHapley Additive exPlanations) | In a medical diagnosis model, SHAP values indicate that while high blood pressure has the most significant positive impact on predicting heart disease, the interaction between high blood pressure and smoking has a negative impact, resulting in a lower overall prediction. |
| Surrogate Models | A decision tree surrogate model mimics the predictions of a deep neural network in image classification. While the surrogate model may not capture all the complexities of the neural network, it highlights that certain combinations of image features are critical for predicting specific classes. |
| Partial Dependence Plots (PDPs) | In a housing price prediction model, a partial dependence plot reveals that as the square footage of a house increases, the predicted price tends to increase linearly until a certain threshold, beyond which the marginal effect diminishes, suggesting diminishing returns on larger houses. |

Feature Importance

Feature importance methods help us figure out how much each input to the model affects its predictions. They show us which features matter the most in helping the model make decisions.

For instance, they might tell us that someone's income and how they've handled credit in the past are the most important things when the model guesses if someone is risky to give a loan to.
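One common way to measure this is permutation importance: shuffle one feature's values and see how much worse the model gets. The snippet below is a minimal sketch of that idea, not a production recipe. The `credit_model` function, its weights, and the synthetic data are all made up for illustration.

```python
import random

def credit_model(row):
    """Hypothetical stand-in for a trained model: returns a risk score."""
    income, credit_history, shoe_size = row
    return 0.6 * (1 - credit_history) + 0.4 * (1 - income) + 0.0 * shoe_size

def mean_abs_error(model, rows, targets):
    return sum(abs(model(r) - t) for r, t in zip(rows, targets)) / len(rows)

def permutation_importance(model, rows, targets, n_features, seed=0):
    """Importance of a feature = increase in error after shuffling its column."""
    rng = random.Random(seed)
    baseline = mean_abs_error(model, rows, targets)
    importances = []
    for j in range(n_features):
        column = [r[j] for r in rows]
        rng.shuffle(column)
        shuffled = [r[:j] + (v,) + r[j + 1:] for r, v in zip(rows, column)]
        importances.append(mean_abs_error(model, shuffled, targets) - baseline)
    return importances

# Synthetic data (an assumption): three features drawn uniformly from [0, 1].
rng = random.Random(42)
rows = [(rng.random(), rng.random(), rng.random()) for _ in range(200)]
targets = [credit_model(r) for r in rows]  # labels the toy model fits exactly

scores = permutation_importance(credit_model, rows, targets, n_features=3)
for name, score in zip(["income", "credit_history", "shoe_size"], scores):
    print(f"{name}: {score:.3f}")
```

In this toy setup, credit history should come out as the most important feature, income second, and the irrelevant `shoe_size` column should score zero, because the model ignores it.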

LIME (Local Interpretable Model-agnostic Explanations)

LIME is like a detective that helps us understand why a computer model makes certain predictions. Imagine the computer model is like a very complicated machine that predicts things. LIME simplifies this complicated machine so we can understand it better.

It changes a little bit of the information going into the machine each time, and sees how the prediction changes. This helps us understand why the computer model predicted something for a particular situation.
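The perturb-and-observe idea can be sketched in a few lines. The code below is a simplified, LIME-style illustration and not the LIME library itself, which differs in its details: it nudges the inputs around one instance, weights nearby samples more heavily, and fits a small linear model to the results. The `loan_risk` model and the example applicant are hypothetical.

```python
import math
import random

def solve_linear_system(a, b):
    """Solve a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b_i] for row, b_i in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]

def lime_style_explanation(model, instance, n_samples=500, scale=0.1, seed=0):
    """Fit a proximity-weighted linear model around one instance."""
    rng = random.Random(seed)
    d = len(instance)
    xtwx = [[0.0] * (d + 1) for _ in range(d + 1)]
    xtwy = [0.0] * (d + 1)
    for _ in range(n_samples):
        noise = [rng.gauss(0, scale) for _ in range(d)]
        sample = [x + e for x, e in zip(instance, noise)]
        # Closer samples get more weight (Gaussian kernel on the distance).
        weight = math.exp(-sum(e * e for e in noise) / (2 * scale ** 2))
        features = [1.0] + noise  # intercept plus offsets from the instance
        y = model(sample)
        for i in range(d + 1):
            xtwy[i] += weight * features[i] * y
            for j in range(d + 1):
                xtwx[i][j] += weight * features[i] * features[j]
    coefs = solve_linear_system(xtwx, xtwy)
    return coefs[0], coefs[1:]  # local intercept, per-feature local weights

def loan_risk(x):
    """Hypothetical black-box model: risk rises with late payments, falls with income."""
    late_payments, income = x
    return 1 / (1 + math.exp(-(3 * late_payments - 2 * income)))

intercept, weights = lime_style_explanation(loan_risk, [0.8, 0.3])
print("local weights (late_payments, income):", [round(w, 3) for w in weights])
```

A positive local weight means that, near this applicant, increasing the feature pushes the predicted risk up; a negative weight pushes it down.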

SHAP (SHapley Additive exPlanations)

Imagine you have a magic tool called SHAP values to understand how a machine learning model makes decisions. It looks at each piece of information the model uses (like age or income) and figures out how much each affects the final decision. But it doesn't stop there - it also looks at how these pieces of information work together.

By using SHAP values, we can see which factors are pulling the prediction in which direction. This helps us understand why the model makes certain predictions. It's like shining a light on the inner workings of the model. SHAP values give us two important things: a big-picture view of how the model works overall (global interpretability) and a breakdown of why it made one particular prediction (local interpretability).
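For a model with only a handful of features, Shapley values can be computed exactly by trying every combination of features. The sketch below does that under two assumptions: an "absent" feature is replaced by a baseline value, and the `heart_risk` function is a made-up toy, not a clinical model. Practical SHAP tooling relies on approximations, so treat this as an illustration of the idea only.

```python
from itertools import combinations
from math import factorial

def shapley_values(model, instance, baseline):
    """Exact Shapley values; 'absent' features take their baseline value."""
    n = len(instance)

    def value(coalition):
        mixed = [instance[i] if i in coalition else baseline[i] for i in range(n)]
        return model(mixed)

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                # Marginal contribution of feature i when added to this subset.
                phi += weight * (value(set(subset) | {i}) - value(set(subset)))
        phis.append(phi)
    return phis

def heart_risk(x):
    """Hypothetical toy model with an interaction term (illustration only)."""
    blood_pressure, smoker, age = x
    return 0.2 * blood_pressure + 0.1 * smoker + 0.05 * age + 0.3 * blood_pressure * smoker

patient = [1.0, 1.0, 1.0]    # made-up, already-scaled feature values
reference = [0.0, 0.0, 0.0]  # baseline "average" patient (an assumption)
phis = shapley_values(heart_risk, patient, reference)
for name, phi in zip(["blood_pressure", "smoker", "age"], phis):
    print(f"{name}: {phi:+.3f}")
```

The contributions add up to the difference between the patient's prediction and the baseline prediction, and the interaction term is split evenly between blood pressure and smoking.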

Surrogate Models

Surrogate models are simplified versions of really complex models. They're easier to grasp because they're not as tricky. They help us understand better how a complicated model makes decisions. This is done by training a simpler model, like a decision tree, to imitate the predictions of the complicated one. Surrogate models give us a simpler view of how the real model works. But they might miss some of the small details of the original model.
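Here is a rough sketch of that process using the simplest possible tree, a single split (a "decision stump"), as the surrogate. The `black_box` function is a hypothetical stand-in for a complex model, and the data is synthetic. The fidelity score shows how often the surrogate agrees with the model it imitates.

```python
import random

def black_box(x):
    """Hypothetical complex model (an assumption): 1 = approve, 0 = reject."""
    income, debt = x
    return 1 if income ** 2 - 0.5 * debt + 0.1 * income * debt > 0.3 else 0

def fit_stump(rows, labels):
    """Find the single feature/threshold split that best matches the labels."""
    best = None
    for j in range(len(rows[0])):
        for threshold in sorted({r[j] for r in rows}):
            for above in (0, 1):  # label predicted when feature > threshold
                preds = [above if r[j] > threshold else 1 - above for r in rows]
                agreement = sum(p == y for p, y in zip(preds, labels)) / len(rows)
                if best is None or agreement > best[0]:
                    best = (agreement, j, threshold, above)
    return best  # (fidelity, feature index, threshold, label when above)

rng = random.Random(0)
rows = [(rng.random(), rng.random()) for _ in range(300)]
labels = [black_box(r) for r in rows]  # the surrogate learns the model's outputs

fidelity, feature, threshold, above = fit_stump(rows, labels)
name = ["income", "debt"][feature]
print(f"surrogate rule: predict {above} if {name} > {threshold:.2f}")
print(f"fidelity to the black box: {fidelity:.0%}")
```

Note that the surrogate is trained on the black box's predictions rather than on real outcomes. A fidelity well below 100% is a sign that the simple rule is missing details of the original model.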

Partial Dependence Plots (PDPs)

Partial dependence plots help us understand how changing one thing in our data affects the final result, on average, across the values the other features take in the data. Let's say we have a model that predicts house prices. A partial dependence plot would show us how changing just one feature, like the size of the house, affects the predicted price while other factors, like location and number of bedrooms, stay as they are in the data. This can help us see how strongly, and in what way, a feature influences the outcome.
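The numbers behind such a plot are simple to compute: force the chosen feature to a value for every row, average the predictions, and repeat for each value on a grid. The sketch below assumes a made-up `price_model` and four invented houses.

```python
def partial_dependence(model, rows, feature_index, grid):
    """Average prediction when one feature is forced to each grid value."""
    curve = []
    for value in grid:
        total = 0.0
        for row in rows:
            modified = list(row)
            modified[feature_index] = value  # other features keep their real values
            total += model(modified)
        curve.append(total / len(rows))
    return curve

def price_model(x):
    """Hypothetical price model with diminishing returns above 3000 sq ft."""
    sqft, bedrooms = x
    return 100 * min(sqft, 3000) + 20 * max(sqft - 3000, 0) + 10_000 * bedrooms

houses = [(1200, 2), (1800, 3), (2500, 4), (3200, 5)]  # (sqft, bedrooms), invented
grid = [1000, 2000, 3000, 4000]
curve = partial_dependence(price_model, houses, feature_index=0, grid=grid)
for sqft, price in zip(grid, curve):
    print(f"{sqft} sq ft -> average predicted price {price:,.0f}")
```

Plotting `grid` against `curve` gives the partial dependence plot. In this toy example the curve rises steadily up to 3000 square feet and then flattens.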

Applications of Interpretability In Machine Learning Models

Machine learning is like a smart tool that helps computers learn from data and make decisions. But sometimes, it can feel like magic because we don't always know how it makes decisions. That's where interpretability comes in. It helps us understand and trust these smart tools better.

Healthcare

Interpretability is essential to improving patient care and results in the healthcare industry. Doctors and other healthcare workers need to understand how machine learning predicts illnesses, patient outcomes, and treatment suggestions. Users can also use the top healthcare apps to record a proper diagnosis and analysis.

For doctors to make a good decision, they need to know why the model says a patient has a high risk of diabetes. This way, patients can trust the AI health tools more, and doctors can check if the tools are reliable and give the right advice for treatment.

Finance

Machine learning is a big help in banking for managing risk, spotting fraud, and deciding who gets loans. Ensuring these models are easy to understand helps regulators and customers know why banks make certain choices. For example, if a bank says no to a loan, they should be able to explain why using the model's results. This makes customers feel better about the bank's decisions and follows rules like the GDPR, which says people have the right to know why decisions are made about them. Several users can also use the Fintech apps today.

Marketing

Doing well in marketing means understanding what consumers like and how they act. So, for marketers to understand why certain recommendations are made, the machine learning models predicting what consumers will do or like need to be easy to understand. When marketers grasp this, they can target digital marketing strategies better, customize customer experiences, and improve marketing strategies.

Manufacturing

Machine learning interpretability helps factories run better and fix problems before they happen. Manufacturers can avoid machines breaking down or production slowing down by figuring out why problems might occur and fixing them early. This saves time and money because they can focus on the exact issues causing trouble.

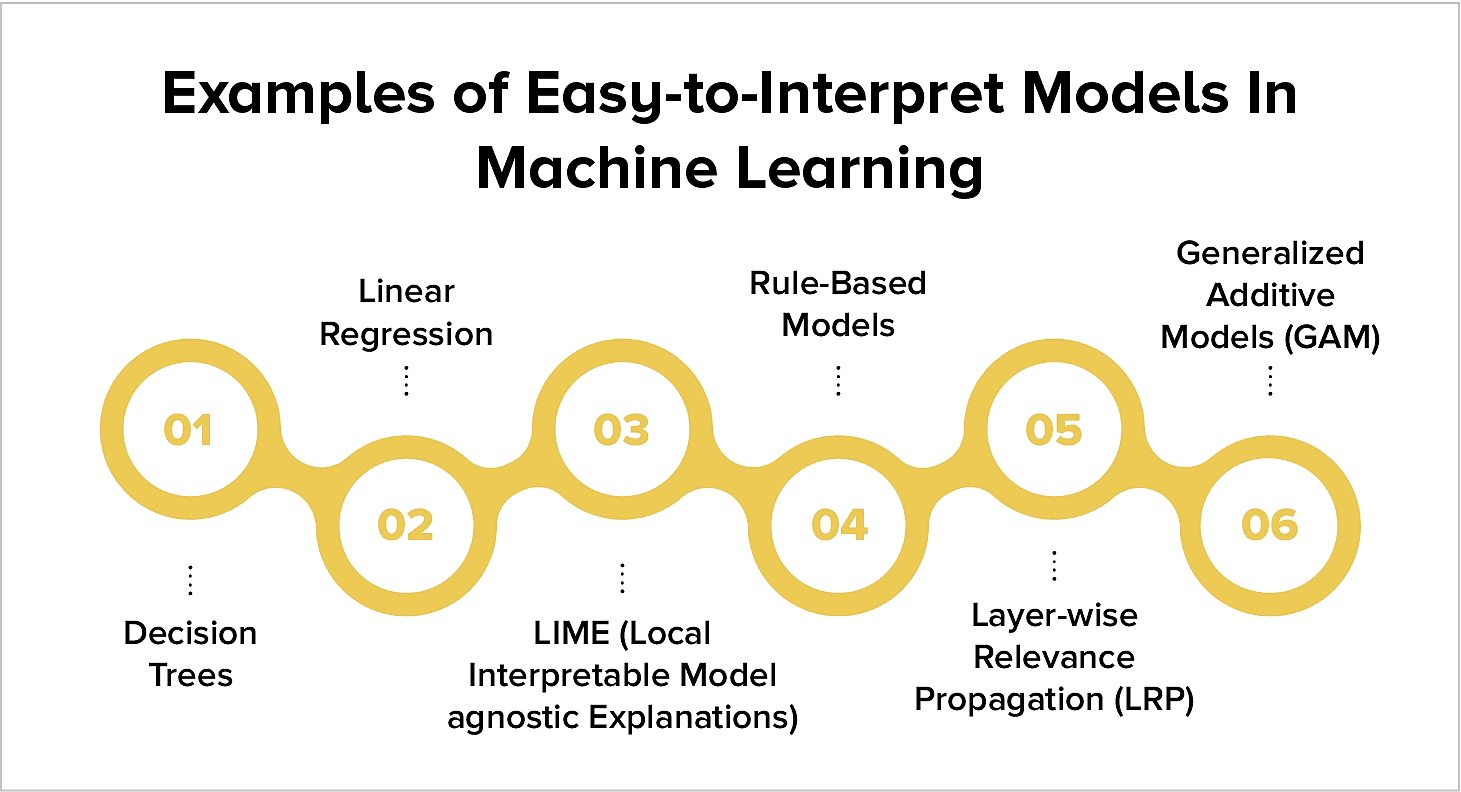

Examples of Interpretability in Machine Learning Models

Looking at specific models and techniques that provide insight into decision-making processes makes interpretability in machine learning and generative AI easier to grasp. The models below are explained with an emphasis on how each one advances the overall goal of improving transparency for AI development companies. Their usefulness also adds to the demand for interpretability in machine learning models.

Decision Trees

A decision tree model is like a flowchart that splits data into different groups based on certain points. At the end of each path, there's a result or guess. These models are easy to understand because they look like a tree, with simple steps leading to a conclusion. Even if you're not a data expert, you can easily see how the model makes decisions, as each step is based on just one thing.
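To see why this is easy to follow, here is a tiny hand-written tree that also records the path it took. The features and thresholds are invented for illustration and were not learned from data.

```python
def classify_loan(applicant):
    """Hypothetical hand-written tree; returns the decision and the path taken."""
    path = []
    if applicant["late_payments"] > 2:
        path.append("late_payments > 2")
        return "reject", path
    path.append("late_payments <= 2")
    if applicant["income"] >= 40_000:
        path.append("income >= 40000")
        return "approve", path
    path.append("income < 40000")
    return "manual review", path

decision, path = classify_loan({"late_payments": 0, "income": 55_000})
print(decision, "because", " and ".join(path))
```

The path is the explanation: every decision can be read back as a short chain of single-feature checks.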

Linear Regression

Linear regression is a way to understand how one thing changes when another thing changes. It looks at how an outcome, like price, changes when factors, like size or time, change. This method is easy to understand because each number in the equation tells you exactly how much the outcome is expected to change.
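As a small illustration, the sketch below fits a straight line to four invented (size, price) pairs using the standard least-squares formulas. The data is made up so that the answer is easy to check by hand.

```python
def fit_line(xs, ys):
    """Ordinary least squares for one feature: returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept

sizes = [50, 80, 100, 120]                     # square meters (invented data)
prices = [110_000, 170_000, 210_000, 250_000]  # generated as 2000 * size + 10000

slope, intercept = fit_line(sizes, prices)
print(f"each extra square meter adds about {slope:,.0f} to the predicted price")
print(f"baseline (intercept): {intercept:,.0f}")
```

The slope is the interpretation: it states how much the predicted price changes for each one-unit change in size.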

LIME (Local Interpretable Model-agnostic Explanations)

The LIME technique explains how machine learning predictions work in a way that people can understand. It does this by using a simpler model to show how changes in the input data affect predictions. This makes it easier to see why the model makes specific predictions, which helps make complicated models clearer.

Rule-based Models

Rule-based models make decisions by following a set of rules, like "if this, then that". These models are easy to understand because their clear rules make them interpretable. They're handy in things like customer service bots and medical diagnosis systems where it's important to explain how decisions are made.
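A rule-based model can be as simple as an ordered list of rules, where the first rule that matches decides the outcome and doubles as the explanation. The rules and team names below are hypothetical, sketched for a support-ticket bot.

```python
# Each rule: (human-readable description, condition, action). All hypothetical.
RULES = [
    ("mentions 'refund'", lambda text: "refund" in text.lower(), "billing team"),
    ("mentions 'password'", lambda text: "password" in text.lower(), "account support"),
]

def route(ticket_text):
    """Return the action of the first matching rule, plus the reason."""
    for description, condition, action in RULES:
        if condition(ticket_text):
            return action, f"matched rule: {description}"
    return "general queue", "no rule matched"

action, reason = route("I forgot my PASSWORD again")
print(action, "|", reason)
```

Because the reason is returned alongside the decision, anyone reviewing the outcome can see exactly which rule fired.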

Layer-wise Relevance Propagation (LRP)

The LRP method explains how neural networks make predictions by tracing back the prediction through the network and giving scores to different inputs. This is really useful for deep learning models, which can seem like a "black box." It helps figure out which parts of the input were most important for the prediction.
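The tracing-back step can be sketched for a tiny network with one hidden ReLU layer and no bias terms. This uses the basic LRP rule with a small stabilizer and is only an illustration: the weights and input are invented, and LRP tooling for real networks applies different rules to different layer types.

```python
def lrp_two_layer(x, w1, w2, eps=1e-9):
    """Basic LRP for output = w2 . relu(w1 x), with no bias terms."""
    hidden_pre = [sum(w * xi for w, xi in zip(row, x)) for row in w1]
    hidden = [max(0.0, z) for z in hidden_pre]
    output = sum(w * h for w, h in zip(w2, hidden))

    # Output -> hidden: each hidden unit receives its share of the output.
    r_hidden = [w * h for w, h in zip(w2, hidden)]

    # Hidden -> input: split each unit's relevance in proportion to x_i * w_ji.
    r_input = [0.0] * len(x)
    for j, (z, r) in enumerate(zip(hidden_pre, r_hidden)):
        if z <= 0:
            continue  # inactive ReLU units carry no relevance
        for i, xi in enumerate(x):
            r_input[i] += xi * w1[j][i] / (z + eps) * r
    return output, r_input

x = [1.0, 2.0, 0.5]                            # invented input
w1 = [[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]]      # hidden-layer weights (invented)
w2 = [1.0, -0.5]                               # output weights (invented)

output, relevance = lrp_two_layer(x, w1, w2)
print("prediction:", round(output, 4))
print("relevance per input:", [round(r, 4) for r in relevance])
```

The relevance scores add up to (almost exactly) the prediction itself, so they show how the output is divided among the inputs.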

Generalized Additive Models (GAM)

Generalized additive models (GAMs) are a type of statistical model that goes beyond simple linear models. They allow for complex relationships between different factors by using smooth functions for each variable's effect. This makes it easier to understand how each feature influences the final outcome, making GAMs interpretable.
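The additive structure is what makes a GAM readable: the prediction is a baseline plus one separate contribution per feature. In the sketch below the shape functions are written by hand purely for illustration; in a real GAM they would be smooth curves learned from data, and the feature names here are assumptions.

```python
import math

INTERCEPT = 0.10  # baseline prediction (invented)

# One function per feature; hand-written stand-ins for learned smooth curves.
SHAPE_FUNCTIONS = {
    "age": lambda v: 0.004 * (v - 40),
    "bmi": lambda v: 0.3 * math.log(v / 25),
    "exercise_hours": lambda v: -0.02 * min(v, 5),
}

def gam_predict(features):
    """Return the prediction and each feature's separate contribution."""
    contributions = {name: f(features[name]) for name, f in SHAPE_FUNCTIONS.items()}
    return INTERCEPT + sum(contributions.values()), contributions

prediction, contributions = gam_predict({"age": 60, "bmi": 30, "exercise_hours": 2})
print(f"prediction: {prediction:.3f}")
for name, value in contributions.items():
    print(f"  {name}: {value:+.3f}")
```

Each feature's effect can be inspected, or plotted, on its own, because no feature's contribution depends on the others.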

Wrapping Up: The Vital Role of Interpretability

The market for artificial intelligence was estimated to be worth $119.78 billion in 2022. The market is expected to grow at a compound annual growth rate (CAGR) of 38.1% to $1,597.1 billion by 2030.

Knowing how AI decides things isn't just a tech thing; it's really important for trust and how well it works. When businesses and developers focus on making AI models easy to understand, they make them stronger. Plus, they make sure they're dependable and follow the rules and what's right.

As AI technology gets better, understanding how it works will be even more important. So, for anyone making or using AI, making sure it's easy to understand isn't just a good idea; it's really important for doing well and being ethical.

Frequently Asked Questions

- Can interpretability be integrated into machine learning models?
- How is interpretability evaluated in ML?
- What is interpretability in machine learning?
- What makes machine learning interpretability crucial?
- Why is human-interpretable machine learning important?

Sr. Content Strategist

Meet Manish Chandra Srivastava, the Strategic Content Architect & Marketing Guru who turns brands into legends. Armed with a Masters in Mass Communication (2015-17), Manish has dazzled giants like Collegedunia, Embibe, and Archies. His work is spotlighted on Hackernoon, Gamasutra, and Elearning Industry.

Beyond the writer’s block, Manish is often found distracted by movies, video games, AI, and other such nerdy stuff. But the point remains: if you need your brand to shine, Manish is who you need.