Machine learning has come a long way since its humble beginnings in the late 1950s. What we associate with machine learning today, however, is wholly different from what it was back then, and that is largely thanks to access to plenty of data and cheap storage.

Big data's influence on machine learning has been profound, arguably to the point of overshadowing it, and this article tracks machine learning's journey from its conception to the present day, where it is one of the most in-demand skills on the job market.

Through it all, we’ll look at how data and storage have influenced that journey.

How It Started: The Early Days

The term machine learning was coined in 1959 by Arthur Samuel. It describes an algorithm that learns to perform a task without explicit instructions telling it how to do it.

While you might not be able to disassociate machine learning and its evolution from neural networks now, the early scientists and researchers had a completely different method of learning in mind, and it was called symbolic learning.

Symbolic learning was AI researchers' first attempt at creating a thinking machine, and its inefficiencies make it one of the most dated methods today. It has since earned the name GOFAI (good old-fashioned artificial intelligence).

Symbolic learning is based on a core assumption - that all knowledge can be expressed through symbols and manipulating those symbols can be the basis for all reasoning and logical thought.

That's why practitioners of symbolic machine learning painstakingly define all the relevant symbols and the rules connecting them, in the hope that the machine will learn the rest of the connections itself.
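To make the idea of hand-written symbols and rules more concrete, here is a minimal sketch of forward chaining, one common way a symbolic system derives new facts from existing ones. The facts, rules, and the `forward_chain` helper are all hypothetical and invented for illustration; they are not taken from any particular historical system.

```python
# Hypothetical facts and rules, invented purely for illustration.
facts = {"has_feathers", "lays_eggs"}

# Each rule pairs a set of premises with a single conclusion.
rules = [
    ({"has_feathers", "lays_eggs"}, "is_bird"),
    ({"is_bird", "can_fly"}, "can_migrate"),
    ({"is_bird"}, "has_wings"),
]


def forward_chain(facts, rules):
    """Apply the rules repeatedly until no new fact can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            # A rule fires only when every premise is already known.
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


derived = forward_chain(facts, rules)
print(sorted(derived))
```

Note that `can_migrate` is never derived, because nobody told the system that the animal `can_fly`. Every such gap has to be closed by hand, which hints at why defining the symbols and rules is so painstaking.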

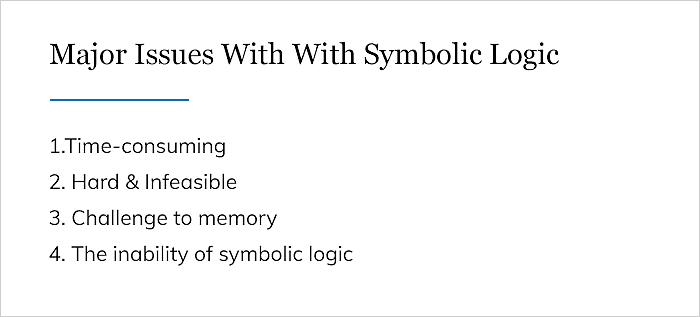

Symbolic learning faced a couple of issues early on, which still pose a significant challenge to the method:

- The inability of symbolic logic to deal with uncertainty and with probabilistic reasoning such as Bayesian inference.

- The problems with neatly classifying information into different symbols pose a serious challenge to memory and processing power even today.

- Inferring new rules is generally hard and often infeasible.

- Processing data with symbolic algorithms is time-consuming.

Entering Neural Networks: The Second Stage of Machine Learning

Recreating human intelligence has been a fantasy of humankind since ancient times, and the idea of recreating or emulating the brain existed long before the first electronic computer was conceived.

So it was a very natural progression that we'd try to emulate how the brain works and recreate it electronically, which is how neural networks were born. Theoretical frameworks for implementing neural networks were developed as early as 1943 when Warren McCulloch and Walter Pitts created a computational model for neural networks.
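As a rough illustration of what such a computational model looks like, here is a simplified threshold unit in the spirit of the McCulloch-Pitts neuron. It is a sketch under stated assumptions: the function names, weights, and thresholds are chosen for illustration, and the original 1943 model has details (such as inhibitory inputs) that are left out here.

```python
def mcp_neuron(inputs, weights, threshold):
    """Return 1 if the weighted sum of binary inputs reaches the threshold, else 0."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


def logical_and(a, b):
    # Both inputs must be active to reach a threshold of 2.
    return mcp_neuron([a, b], [1, 1], threshold=2)


def logical_or(a, b):
    # A single active input is enough to reach a threshold of 1.
    return mcp_neuron([a, b], [1, 1], threshold=1)


# Print the truth table: a, b, AND, OR.
for a in (0, 1):
    for b in (0, 1):
        print(a, b, logical_and(a, b), logical_or(a, b))
```

Nothing is learned in this sketch: the weights and thresholds are fixed by hand, and only the threshold differs between the two gates.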

But because of theoretical and computational limitations, neural network research largely stalled until the mid-70s, when a paper by Werbos solved some of the theoretical hurdles.

Another major milestone in neural networks’ development was the field finally being able to harness the power of distributed parallel processing (which is basically how the brain functions), and this gave a huge boost to neural networks’ learning speed and viability.

Neural networks had one crucial weakness, however: they required a lot of data. Because neural networks need a significant amount of representative data to generalize and to handle new cases with reliable accuracy, the algorithms are of little use without it.

During the 90s and the early 00s when access to data was relatively hard, these algorithms found relatively few uses.

What Happened When Big Data Took Over

While the term big data has been in use since the mid-90s, only in the late 00s were the technology and expertise widespread enough for people to make use of it.

The ease with which data could be accessed, manipulated, and processed due to better infrastructure was unprecedented in human history, and it solved the biggest weakness neural networks had - the algorithms’ need for a lot of data.

This caused an explosion in machine learning that hasn’t slowed down to date. The algorithms started being used in marketing, content analysis, social media sentiment detection, and more.

Machine learning's impact cuts across nearly every industry, seamlessly augmenting processes and streamlining workflows. While automation naturally sparks concerns about job displacement, AI development companies are actively navigating this challenge.

JCU Online in their blog post ‘Teaching machines to learn: top machine learning applications’ covers some of the modern day examples of machine learning applications.

The Upshot

Interestingly enough, the history of machine learning can be interpreted as a struggle to deal with data. In its early years, the classification and organization of data were what stopped machine learning progress.

Then, neural networks became feasible, but progress once again almost ground to a complete halt because the algorithms didn’t have enough data to work accurately. Finally, big data came around and made many developments in machine learning possible.

We don’t know what the future will hold, but it will undoubtedly be related to data in some form. Apart from that, if you are a machine learning enthusiast, make sure you follow MobileAppDaily on social media so you never miss a trending update.

Sr. Content Strategist

Meet Manish Chandra Srivastava, the Strategic Content Architect & Marketing Guru who turns brands into legends. Armed with a Masters in Mass Communication (2015-17), Manish has dazzled giants like Collegedunia, Embibe, and Archies. His work is spotlighted on Hackernoon, Gamasutra, and Elearning Industry.

Beyond the writer’s block, Manish is often found distracted by movies, video games, AI, and other such nerdy stuff. But the point remains: if you need your brand to shine, Manish is who you need.